Explainable AI (XAI) and Interpretable Machine Learning are two fast-growing areas of AI research that could boost the adoption of AI tools in companies’ decision-making processes.

In this article, we will explain in detail what Explainable AI is, how it was born, and why it can be a decisive factor for the adoption of AI systems in companies.

From Artificial Intelligence (AI) to Explainable AI (XAI)

Artificial intelligence is set to change our way of life: tools and technologies that only a few decades ago seemed to belong to science fiction are taking over many of the processes that are still carried out manually today.

The largest companies in the world have now understood the potential of this discipline and are investing huge economic and human resources in the development of “intelligent” systems that can revolutionize their business. However, some companies still have doubts about the impact and risks of introducing AI systems into their business logic.

This attitude stems from several factors, ranging from a lack of specialized in-house skills, to an unclear perception of the potential of these techniques, to the fear of delegating important company decisions to a black box. Partly to address this last concern, in recent years many researchers have developed new tools aimed both at building alternatives to “black box” systems and at designing methods for the a posteriori analysis of existing algorithms.

This gave rise to a new research area, “Explainable Artificial Intelligence” (XAI), which studies and designs algorithms whose decisions can be understood by human beings and described in terms of cause-effect relationships.

Interpretability and Explainability

A first step toward making the operation of an Artificial Intelligence system more understandable is to adopt models that are intrinsically interpretable. The interpretability of a model is defined as the degree to which a human can consistently predict the model’s result.

In other words, when we adopt an interpretable model, a human user can anticipate with confidence how the model will behave for a given input. Building an Explainable AI model, on the other hand, provides an additional level of understanding of the system.

More specifically, by Explainability we mean the ability to understand and quantify the cause-effect relationships that lead to an output, and to extract specific information captured by the model. In summary, while Interpretability lets us understand how the model reaches a certain output (How), Explainability also lets us grasp the deeper reasons behind it (Why).
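To make the distinction concrete, here is a minimal sketch assuming a hypothetical linear scoring model; the feature names, weights, and the helpers `predict` and `explain` are illustrative inventions, not part of any library. A linear model is interpretable because a human can predict its output from the weights alone, and splitting a single score into per-feature contributions is one simple way to answer the “why” for that prediction.

```python
# Hypothetical linear scoring model: the feature names and weights are
# illustrative assumptions, chosen only to show how an interpretable model works.
WEIGHTS = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
BIAS = 1.0


def predict(x: dict[str, float]) -> float:
    """Score = bias + weighted sum of the features (easy for a human to anticipate)."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in x.items())


def explain(x: dict[str, float]) -> dict[str, float]:
    """Per-feature contributions weight * value; together with BIAS they sum to predict(x)."""
    return {name: WEIGHTS[name] * value for name, value in x.items()}


applicant = {"income": 4.0, "debt": 2.0, "years_employed": 6.0}
score = predict(applicant)
contributions = explain(applicant)
# Rank features by the magnitude of their effect on this particular score.
ranking = sorted(contributions, key=lambda name: abs(contributions[name]), reverse=True)

print(f"score = {score}")
for name in ranking:
    print(f"{name:>15}: {contributions[name]:+.2f}")
```

Attributing `weight * value` to each feature measures contributions relative to an all-zero input; attribution methods such as SHAP extend the same additive idea to non-linear models, at a higher computational cost.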

Why Explainable AI?

Explainability gives AI systems several properties that make them preferable to black boxes for managing the most critical processes:

- Knowledge. Explainable models make it possible to extract valuable information from the data by representing cause-effect relationships between the variables involved that would otherwise escape human analysis;

- Trust. Decisions made by an explainable model are more easily accepted by human operators as the process that generated them is transparent;

- Robustness. Explainable models are often also robust, i.e., small variations in the input do not cause large variations in the output;

- Safety. In explainable models, we can adjust the mechanisms that generate the output, preventing the model from making overly risky or even catastrophic decisions;

- Fairness. Adopting these models makes it easier to detect whether the model’s decisions reflect biases present in the dataset.
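One simple way to probe the robustness property empirically is to perturb an input slightly many times and record the largest resulting change in the output. The sketch below assumes two hypothetical models, a smooth linear one and a brittle threshold rule, plus an illustrative helper `max_output_change`; random sampling only yields a lower bound on the true worst-case change, not a guarantee.

```python
import random


def linear_model(x: list[float]) -> float:
    # Hypothetical glassbox model: with weights 0.5 and -0.25, a perturbation of
    # at most eps per feature can move the output by at most 0.75 * eps.
    return 0.5 * x[0] - 0.25 * x[1]


def threshold_model(x: list[float]) -> float:
    # Hypothetical brittle model: the output jumps when x[0] crosses 1.0.
    return 100.0 if x[0] > 1.0 else 0.0


def max_output_change(model, x, epsilon=0.01, trials=1000, seed=0):
    """Largest |model(x') - model(x)| observed over random x' with each feature
    within +/- epsilon of x. Sampling gives a lower bound on the true worst case."""
    rng = random.Random(seed)
    base = model(x)
    worst = 0.0
    for _ in range(trials):
        perturbed = [v + rng.uniform(-epsilon, epsilon) for v in x]
        worst = max(worst, abs(model(perturbed) - base))
    return worst


x = [1.0, 2.0]
print("linear:   ", max_output_change(linear_model, x))     # at most 0.0075
print("threshold:", max_output_change(threshold_model, x))  # 100.0
```

Because the probe treats the model as a function to be queried, the same check can be applied to glassbox and blackbox candidates alike.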

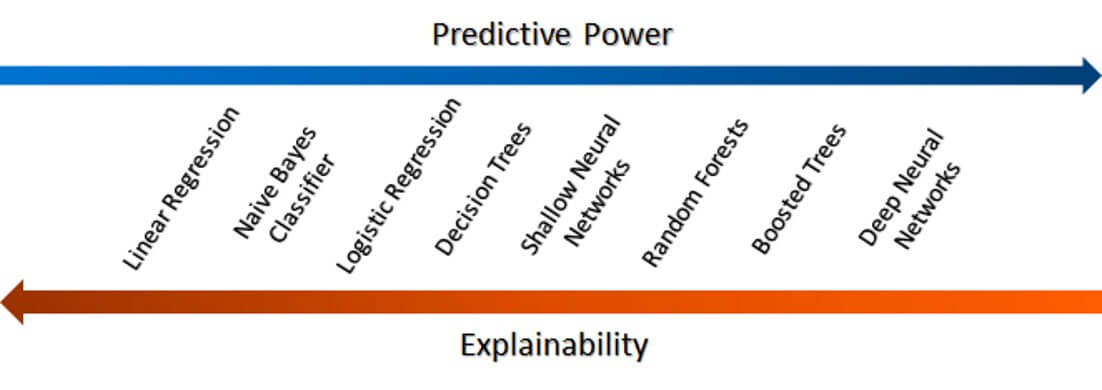

Blackbox vs Glassbox

Thanks to their transparency, XAI-based systems have been described as “glassbox” models, as opposed to blackbox models, whose choices are incomprehensible to users. Of course, the flip side of the complexity of blackbox models is greater expressiveness, which allows them to solve very difficult tasks and describe very complex processes.

That said, in many contexts XAI-based systems achieve performance comparable to that of blackbox systems. In short, the choice between blackbox and glassbox must be carefully evaluated by experts who take into account both the users’ requirements and the nature of the problem to be solved.

Example: Autonomous Driving

Autonomous driving is a very complex problem in which both large companies and the scientific community are investing heavily. The difficulty in implementing systems capable of performing this task is due to several factors.

First, an autonomous vehicle must simultaneously perform multiple sub-tasks at different levels of abstraction. Furthermore, learning these tasks requires a large amount of data covering many driving scenarios. At the same time, an autonomous vehicle must favor safe actions that do not put its passengers at risk.

However, it is far from trivial to cover, during training, all the scenarios needed to learn the right policy for risky situations. For this reason, for high-level tasks, XAI approaches may be the right way to make the system’s output predictable and therefore safer. In particular, XAI techniques designed to analyze existing models can evaluate how the system behaves as its input parameters vary.

For example, a system whose braking behavior depends on air temperature could fail under extreme conditions if those conditions were not represented in the data used to train it. XAI techniques, by contrast, allow us to evaluate the impact of abnormal temperatures on the system’s behavior.
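This kind of analysis can be sketched with a one-dimensional partial dependence curve: set the temperature of every sample to each value on a grid, average the model’s predictions, and flag grid values that fall outside the temperatures seen in training. The braking model `braking_distance`, the `TRAINING_DATA` samples, and the helper `partial_dependence` below are hypothetical, invented only for illustration.

```python
# Hypothetical training samples: (speed in km/h, air temperature in deg C).
TRAINING_DATA = [(50, 5), (80, 12), (100, 20), (60, 28), (90, 0), (70, 30)]


def braking_distance(speed_kmh: float, temperature_c: float) -> float:
    """Hypothetical learned model of braking distance in metres. The quadratic
    temperature term is mild within 0-30 deg C but grows quickly outside it."""
    return 0.005 * speed_kmh**2 + 0.01 * (temperature_c - 15) ** 2


def partial_dependence(model, data, grid):
    """1-D partial dependence: for each grid temperature t, set every sample's
    temperature to t (keeping its speed) and average the predictions."""
    return {t: sum(model(speed, t) for speed, _ in data) / len(data) for t in grid}


temps = [temp for _, temp in TRAINING_DATA]
low, high = min(temps), max(temps)
grid = [-30, -10, 0, 15, 30, 45]
pd_curve = partial_dependence(braking_distance, TRAINING_DATA, grid)

for t, avg in pd_curve.items():
    flag = "" if low <= t <= high else "  <- outside training range"
    print(f"{t:>4} deg C: {avg:6.1f} m{flag}")
```

Partial dependence assumes the varied feature is roughly independent of the others, and it only describes what the model predicts; whether those predictions are physically correct outside the training range still has to be checked against real data.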

For more information:

- Molnar, Christoph. “Interpretable Machine Learning: A Guide for Making Black Box Models Explainable.” (2020).

- Miller, Tim. “Explanation in artificial intelligence: Insights from the social sciences.” Artificial Intelligence 267 (2019): 1-38.

- Murdoch, W. James, et al. “Interpretable machine learning: definitions, methods, and applications.” arXiv preprint arXiv:1901.04592 (2019).